Consciousness and Informational Access

Consciousness is a strange phenomenon. We experience the world; we feel things. And yet we can never feel what others feel, nor what dogs, trees, rocks, or computers feel, should any of them have feelings at all. And when we study the brain, the one thing strongly correlated with feelings, there are no feelings to be found. Instead we find neurons, molecules, and atoms. This leads to a question: if we know for sure, from our own minds, that we are conscious, but cannot point out what is and isn’t conscious when studying the material world, then what is consciousness, and how are we conscious?

But perhaps the explanation need not be so difficult if we assume one, maybe strange, property: informational access. This property comes about wherever information is being analyzed, recognized, and responded to. As we will see, in most systems this access is rather narrow, but it can also be broad. It is this broad access that our brains leverage, because it is key to our ability to plan, think, and learn. And when we situate this broad informational access in a brain like ours, it becomes clear why certain aspects of consciousness look so mysterious.

Access

Let’s first explore informational access through the example of an analog thermostat. Such a thermostat evaluates an informational model something like this: “heater on = room temperature < user temperature”. Should the thermostat not have access to information about the temperature in a room, it won’t be able to evaluate this model, and thus it won’t be able to function properly. However, the thermostat does not have informational models about rooms, or users, or heaters. Those are labels we plug into the model, because we know its larger context. A thermostat, lacking that context, is only evaluating “a = b < c”. Moreover, it doesn’t even have that model available as information. Instead, it implements that model through a clever arrangement of mechanical parts.

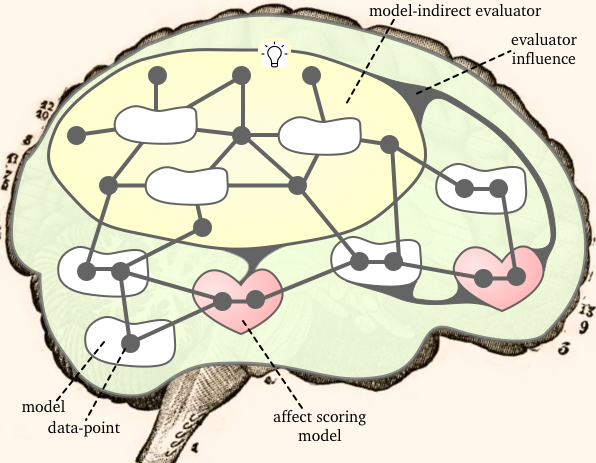
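As a rough illustration of how narrow this access is, the thermostat's model can be sketched in a few lines of Python. The function name and the example temperatures are assumptions made for this sketch only. Note that the function itself only ever sees two numbers; the labels "room", "user", and "heater" exist solely on the caller's side, where the larger context is known.

```python
def thermostat(b: float, c: float) -> bool:
    """Evaluate a = b < c. The function has no notion of rooms, users, or heaters."""
    return b < c


# The context lives out here, with us, not inside the thermostat.
room_temperature = 18.5   # hypothetical reading, in degrees Celsius
user_temperature = 21.0   # hypothetical setting, in degrees Celsius
heater_on = thermostat(room_temperature, user_temperature)
```

Even this sketch overstates what an analog thermostat has: here the comparison is at least written down as code, whereas the mechanical device merely embodies it.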

What is true for the thermostat is true for most systems that process information: neither the implemented model, nor any broader context, is accessible to the implementation. Much of our brain functions the same way, except for the part responsible for planning and reasoning. This part adds an indirection: it processes models that process information. And the models are as much a part of its repertoire as the information they process. For example, when you contemplate a certain problem, you might think of a solution. At that point you can take a step back and see how you came to that solution, what mental models you used, whether the reasoning is valid, and how best to explain it to others. This stepping back, this access to context, is key to our intelligence. It allows us not just to process information, but to correct existing models, to learn new models, and even to improve the models responsible for learning. This is how the model-indirect part of our brain has broad informational access, a property that we call awareness and that the literature knows as access consciousness.
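The indirection described above can be sketched as a toy program in which models are held as data, so that they can be applied, inspected, and replaced. This is only an analogy under stated assumptions: the names `Model` and `ModelIndirectSystem`, and the heating rules used as examples, are invented for this sketch and make no claim about how the brain actually implements anything.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Model:
    """A model held as data: a rule plus a description of that rule."""
    description: str
    rule: Callable[[float, float], bool]


class ModelIndirectSystem:
    """Toy system that processes models, which in turn process information."""

    def __init__(self) -> None:
        self.models: Dict[str, Model] = {}

    def learn(self, name: str, model: Model) -> None:
        # Add a new model, or overwrite (correct) an existing one.
        self.models[name] = model

    def apply(self, name: str, b: float, c: float) -> bool:
        # Use a stored model to process information.
        return self.models[name].rule(b, c)

    def explain(self, name: str) -> str:
        # Step back: report which model is currently in use.
        return self.models[name].description


system = ModelIndirectSystem()
system.learn("heating", Model("heater on = room < target", lambda b, c: b < c))
first = system.apply("heating", 20.5, 21.0)   # True under the original model

# Correct the model: only heat when the room is more than one degree too cold.
system.learn("heating", Model("heater on = room < target - 1", lambda b, c: b < c - 1))
second = system.apply("heating", 20.5, 21.0)  # False under the corrected model
```

Unlike the thermostat, this system can report which model it is using and can swap that model for a better one, which is the narrow-versus-broad contrast the text draws.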

Two Mysteries

And I would propose that this broad informational access is all that we have. When we situate it in the rest of the brain, and look at things from its perspective, we see that two strange things must be going on. First, much information just appears: not explicable, not correctable, and lacking context, because it did not originate in the model-indirect system. Second, what to focus on, what is important, what is positive, and what is negative are not yet more information for the model-indirect system; they are other parts of the brain influencing how the brain functions overall. Indeed, from the perspective of our minds, our thoughts just appear; objects are just recognized in our visual field; we just know the next song in a playlist. Meanwhile, our emotions and motivations can influence what we perceive, can change our focus, and can even render us (somewhat) irrational.

Virtual

On this view, feelings are virtual. They exist only by virtue of how the brain is organized, and by what effects they have on the various other systems in the brain. We become consciously aware of our feelings when they push and pull on the model-indirect system, or when we pick them up through changes in our physiology. To some, such a view implies that feelings are not real, or that feelings don’t really matter. Quite the opposite is true. It is exactly because feelings, like love or pain, should matter to physical beings like us that evolution has wired them into our brains such that they are nearly impossible to escape. Moreover, the fact that feelings are virtual explains why they are not to be found in neuronal activity, just as wetness cannot be found in a computer running a rainfall simulation. Feelings, like virtual raindrops, only have effects on other things going on inside these systems. From the outside, at best, physical activity can be correlated with descriptions of what goes on inside.